SVM .NET with CUDA – KMLib

KMLib – SVM .NET implementation

KMLib (Kernel Machine Library) is a free, open-source, CUDA-accelerated SVM library for .NET. It contains several implementations of Support Vector Machine algorithms written in C# (.NET 4.0).

pyKMLib – Python SVM with CUDA (GPU) support: a multiclass CUDA SVM solver for sparse problems and a child project of KMLib.

Download pyKMLib from GitHub

Key Features

- .NET implementation

- Parallel kernel implementation

- SVM CUDA acceleration – kernels and solvers

- CUDA SVM with sparse data formats: CSR, Ellpack-R, Sliced-Ellpack

- Free MIT license for non-commercial and academic use; if you use it, please cite: Bibtex CUDA SVM CSR

Description

The main goals of this library are extensibility and speed. First, you can use popular kernels:

- RBF,

- Linear,

- Polynomial,

- Chi-Square,

- Exponential Chi-Square,

- …and easily plug in your own custom kernels by implementing the IKernel interface.
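For reference, the kernels listed above can be written as plain functions on dense vectors. The following is a minimal Python sketch that uses common textbook definitions. It is not KMLib's C# code. The parameter names gamma, coef0 and degree follow LIBSVM conventions and are assumptions here, and KMLib's exact parameterization of the two chi-square kernels may differ.

```python
import math


def linear(x, y):
    # K(x, y) = <x, y>
    return sum(a * b for a, b in zip(x, y))


def rbf(x, y, gamma):
    # K(x, y) = exp(-gamma * ||x - y||^2)
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, y)))


def polynomial(x, y, gamma, coef0, degree):
    # K(x, y) = (gamma * <x, y> + coef0)^degree
    return (gamma * linear(x, y) + coef0) ** degree


def chi_square(x, y):
    # Additive chi-square kernel for non-negative features:
    # K(x, y) = sum_i 2 * x_i * y_i / (x_i + y_i); terms with x_i + y_i == 0 are skipped
    return sum(2.0 * a * b / (a + b) for a, b in zip(x, y) if a + b > 0)


def exp_chi_square(x, y, gamma):
    # K(x, y) = exp(-gamma * sum_i (x_i - y_i)^2 / (x_i + y_i)), same zero-term rule
    d = sum((a - b) ** 2 / (a + b) for a, b in zip(x, y) if a + b > 0)
    return math.exp(-gamma * d)
```

For example, linear([1.0, 0.0, 2.0], [0.5, 1.0, 2.0]) returns 4.5. The chi-square kernels assume non-negative features, such as histograms.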

Second, it implements several CUDA GPU SVM solvers with different kernels. Each uses a sparse data format such as CSR [CuCSRSVM2012], Ellpack-R or Sliced EllR-T [in print], which makes it possible to classify bigger datasets:

- Linear – CSR,

- RBF – CSR, Ellpack-R, Sliced EllR-T
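The formats listed above differ mainly in how rows are padded and laid out in memory. The following Python sketch is not KMLib code, and the function names are hypothetical. It makes some assumptions:

- It converts CSR arrays (values, column indices, row pointers) to Ellpack-R. In Ellpack-R, every row is padded to the longest row, storage is column-major, and the real length of each row is kept in a separate array.
- It computes an RBF kernel column with one sparse matrix-vector product, using ||x_i - x_j||^2 = ||x_i||^2 + ||x_j||^2 - 2<x_i, x_j>. This is a common way to evaluate RBF kernels on sparse data. The sketch does not claim to reproduce KMLib's CUDA kernels.
- It shows how a sliced variant pads each block of rows only to that block's own longest row. The "-T" part of Sliced EllR-T, which splits each row among T threads, is not modelled.

```python
import math


def csr_to_ellpack_r(values, col_idx, row_ptr):
    """Convert CSR arrays to Ellpack-R: padded column-major arrays plus row lengths."""
    n_rows = len(row_ptr) - 1
    row_len = [row_ptr[i + 1] - row_ptr[i] for i in range(n_rows)]
    width = max(row_len, default=0)  # every row is padded to the longest row
    vals = [0.0] * (n_rows * width)
    cols = [0] * (n_rows * width)
    for i in range(n_rows):
        for k, p in enumerate(range(row_ptr[i], row_ptr[i + 1])):
            # column-major: element k of row i sits at k * n_rows + i, so on a GPU
            # neighbouring threads (one per row) read neighbouring addresses
            vals[k * n_rows + i] = values[p]
            cols[k * n_rows + i] = col_idx[p]
    return vals, cols, row_len, width


def ellpack_r_spmv(vals, cols, row_len, n_rows, x):
    """y = A @ x for a dense x; row i visits only its row_len[i] stored entries."""
    y = [0.0] * n_rows
    for i in range(n_rows):  # on a GPU: one thread per row
        for k in range(row_len[i]):
            pos = k * n_rows + i
            y[i] += vals[pos] * x[cols[pos]]
    return y


def rbf_kernel_column(vals, cols, row_len, n_rows, sq_norms, x_j, j, gamma):
    """K(x_i, x_j) for every row i with a single SpMV, using
    ||x_i - x_j||^2 = ||x_i||^2 + ||x_j||^2 - 2 <x_i, x_j> (x_j given densely)."""
    dots = ellpack_r_spmv(vals, cols, row_len, n_rows, x_j)
    return [math.exp(-gamma * (sq_norms[i] + sq_norms[j] - 2.0 * dots[i]))
            for i in range(n_rows)]


def slice_widths(row_ptr, slice_size):
    """Sliced variant: each block of slice_size rows is padded to its own longest row."""
    n_rows = len(row_ptr) - 1
    widths = []
    for start in range(0, n_rows, slice_size):
        stop = min(start + slice_size, n_rows)
        widths.append(max(row_ptr[i + 1] - row_ptr[i] for i in range(start, stop)))
    return widths
```

Consider a 4×4 matrix with rows (5,0,0,1), (0,2,0,0), (0,0,0,0) and (3,4,6,7). Ellpack-R pads all four rows to width 4, which gives 16 slots for 7 nonzeros. Slices of two rows are padded to widths 2 and 4, which gives 12 slots. This is why sliced formats waste less memory when row lengths vary a lot.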

In addition, KMLib implements many popular and a few experimental SVM solvers:

- Platt's SMO solver,

- LibSVM implementation with parallel support for many-core computations (an efficient, thin implementation using the .NET Task class and Parallel.For),

- CSR-GPU-SVM solver using the CSR (Compressed Sparse Row) sparse matrix format, which allows classifying big, sparse datasets such as 20newsgroup.binary (~10x speedup on a GeForce 460 compared to LibSVM),

- GPU SVM with Ellpack-R [see the article by Tsung-Kai Lin et al., "Support Vector Machines on GPU with Sparse Matrix Format"],

- GPU SVM with Sliced EllR-T [see the article (in print) by Sopyla, "GPU Accelerated SVM with Sparse Sliced EllR-T Matrix Format"],

- Specialized linear SVM solver based on the LibLinear library,

- Multiclass solver – one-against-all,

- Specialized linear SVM with a stochastic gradient descent (SGD) solver, based on Bottou's SGD implementation,

- Specialized linear SVM with a Barzilai-Borwein gradient solver,

- Specialized linear SVM with a stochastic gradient solver using a Barzilai-Borwein step-size update.
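The following minimal Python sketch illustrates the stochastic gradient idea behind the SGD-based linear solvers. It runs Pegasos-style SGD on the primal, L2-regularized hinge-loss objective. Pegasos is swapped in here as a well-known, compact variant. It is not KMLib's code, and the Bottou-based and Barzilai-Borwein solvers listed above use different step-size rules. The function names and the defaults for lam and epochs are assumptions.

```python
import random


def pegasos_linear_svm(X, y, lam=0.01, epochs=20, seed=0):
    """Primal linear SVM trained by SGD on
        lam/2 * ||w||^2 + mean_i max(0, 1 - y_i * <w, x_i>).
    X: list of dense feature lists, y: labels in {-1, +1}.
    A constant 1.0 feature is appended, so the (regularized) bias is part of w."""
    rng = random.Random(seed)
    data = [(list(x) + [1.0], label) for x, label in zip(X, y)]
    w = [0.0] * len(data[0][0])
    t = 0
    for _ in range(epochs):
        rng.shuffle(data)
        for x, label in data:
            t += 1
            eta = 1.0 / (lam * t)  # Pegasos step size, decreasing as 1/t
            margin = label * sum(wj * xj for wj, xj in zip(w, x))  # uses w before the update
            w = [(1.0 - eta * lam) * wj for wj in w]  # gradient step on the regularizer
            if margin < 1.0:  # hinge loss is active: move w towards label * x
                w = [wj + eta * label * xj for wj, xj in zip(w, x)]
    return w


def predict(w, x):
    """Sign of the decision function, with the same constant feature appended."""
    score = sum(wj * xj for wj, xj in zip(w, list(x) + [1.0]))
    return 1 if score >= 0 else -1


X = [[2.0, 2.0], [3.0, 3.0], [-2.0, -2.0], [-3.0, -1.0]]
y = [1, 1, -1, -1]
w = pegasos_linear_svm(X, y)
print([predict(w, x) for x in X])  # → [1, 1, -1, -1]
```

Appending a constant feature regularizes the bias together with w. This is a common simplification, and LIBLINEAR's -B option works the same way.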

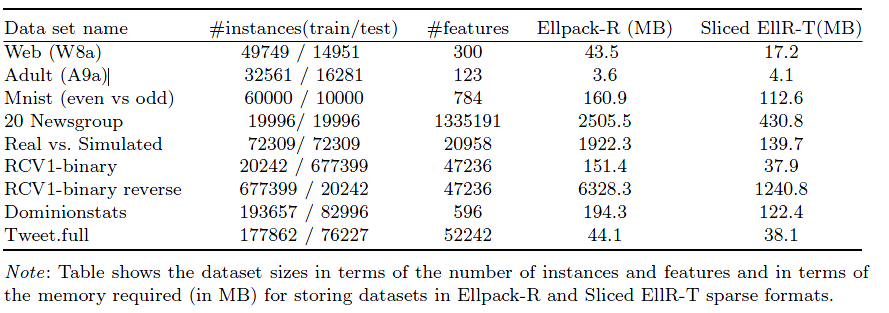

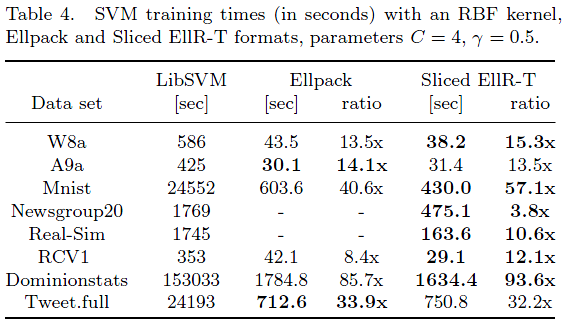

Some results of GPU SVM with the RBF kernel in sparse formats (data from the LibSVM Dataset Repository).

How to use

See the KMLibUsageApp project for details.

Simple classification procedure

- Read the dataset into the Problem class

- Create the kernel

- Use the Validation class, which trains the SVM on the training set and tests it on the test set

The code should look like this:

```csharp
// 1. Read the dataset into the Problem class; the a1a dataset is available
//    at http://www.csie.ntu.edu.tw/~cjlin/libsvmtools/datasets/binary.html#a1a
//    (123 is the number of features in a1a)
Problem train = IOHelper.ReadDNAVectorsFromFile("a1a", 123);
Problem test = IOHelper.ReadDNAVectorsFromFile("a1a.t", 123);

// 2. Choose and create the kernel: RBF kernel with gamma = 0.5
IKernel kernel = new RbfKernel(0.5f);

// 3. Use the Validation class; the last parameter is the penalty C of the SVM solver
double tempAcc = Validation.TestValidation(train, test, kernel, 8f);
```
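The a1a and a1a.t files from the link above use the LibSVM sparse text format. Each line holds one example: a label followed by index:value pairs with 1-based feature indices. The following minimal Python sketch parses such lines into a label list and CSR arrays. It only shows what reading such a file involves and is not KMLib's IOHelper. read_libsvm_lines is a hypothetical name, and the n_features bound mirrors the second argument (123) in the C# example.

```python
def read_libsvm_lines(lines, n_features):
    """Parse LibSVM-format lines into labels and CSR arrays with 0-based columns."""
    labels, values, col_idx, row_ptr = [], [], [], [0]
    for line in lines:
        line = line.strip()
        if not line:  # skip blank lines
            continue
        label, *pairs = line.split()
        labels.append(float(label))
        for pair in pairs:
            idx, val = pair.split(":")
            col = int(idx) - 1  # LibSVM feature indices are 1-based
            if not 0 <= col < n_features:
                raise ValueError(f"feature index {idx} out of range")
            col_idx.append(col)
            values.append(float(val))
        row_ptr.append(len(values))  # end of this row in values/col_idx
    return labels, values, col_idx, row_ptr
```

Typical use is `with open("a1a") as f: labels, vals, cols, ptr = read_libsvm_lines(f, 123)`. The resulting CSR arrays are the natural starting point for GPU formats such as Ellpack-R.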

Project and CUDA Kernels build procedure

In Visual Studio 2010, open the solution Configuration Manager and set Active solution platform to x86 or x64. When "Any CPU" is selected, the project will not build properly.

In the KMLib.GPU project properties, under "Build Events" -> "Post-build event command line", there are a few commands:

```bat
xcopy "$(TargetDir)CUDAmodules\*.cu" "$(TargetDir)" /Y
del *.cubin
nvcc cudaSVMKernels.cu linSVMSolver.cu rbfSlicedEllpackKernel.cu gpuFanSmoSolver.cu --cubin -ccbin "%VS100COMNTOOLS%../../VC/bin" -m32 -arch=sm_30
xcopy "$(TargetDir)*.cubin" "$(SolutionDir)Libs" /Y
```

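- copy all *.cu files (CUDA code) from the CUDAmodules folder into the target folder (usually the Release folder),

- delete old *.cubin files (compiled CUDA kernels),

- compile all CUDA modules to *.cubin files with nvcc, where:

  - -m32 or -m64 indicates a 32- or 64-bit architecture (this should match the x86/x64 platform selected above),

  - -arch=sm_21 or -arch=sm_30 indicates the compute capability. The former is for Fermi cards with compute capability 2.1 (e.g. GeForce GTX 460; compute capability 2.0 cards such as the GeForce GTX 470 need -arch=sm_20), the latter for Kepler cards (e.g. GeForce GTX 690). It is very important to set this switch according to your card's compute capability,

- copy the compiled *.cubin files into the solution's Libs folder.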
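You can also assemble the nvcc call programmatically, for example to build the kernels outside Visual Studio or to switch the -m and -arch values without editing the post-build event by hand. The following is a minimal Python sketch. The module list comes from the post-build event above, while nvcc_cubin_command and its parameters are hypothetical. The sketch only builds the argument list. Running it requires nvcc, and a host compiler nvcc can find, on the PATH.

```python
CUDA_MODULES = ["cudaSVMKernels.cu", "linSVMSolver.cu",
                "rbfSlicedEllpackKernel.cu", "gpuFanSmoSolver.cu"]


def nvcc_cubin_command(bits, compute_capability, ccbin=None, modules=CUDA_MODULES):
    """Build the nvcc argument list that compiles the SVM modules to .cubin files.

    bits: 32 or 64, matching the x86/x64 solution platform.
    compute_capability: (major, minor), e.g. (2, 1) for a GeForce GTX 460
    or (3, 0) for a GeForce GTX 690.
    ccbin: optional host compiler directory (the VS2010 VC/bin folder in the
    post-build event)."""
    if bits not in (32, 64):
        raise ValueError("bits must be 32 or 64")
    major, minor = compute_capability
    cmd = ["nvcc", *modules, "--cubin"]
    if ccbin:
        cmd += ["-ccbin", ccbin]
    cmd += [f"-m{bits}", f"-arch=sm_{major}{minor}"]
    return cmd
```

For example, `subprocess.run(nvcc_cubin_command(64, (3, 0)), check=True)` would compile 64-bit cubins for a Kepler card. Keeping bitness and compute capability as explicit parameters makes the two settings that most often break the build visible in one place.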
